Artificial intelligence. It’s a topic that’s hard to ignore. Since the launch of ChatGPT in November 2022, anyone with an internet connection has had access to an innovative chatbot that can provide answers, summarize texts, and offer translations within seconds. The tool was quickly embraced by employees and students alike, but critical voices are raising concerns about the ethical aspects of artificial intelligence. What is the impact of AI on gender equality—and should we be worried?

(Unconscious) Bias

The internet is full of gender stereotypes. In the endless stream of online information, every opinion and worldview can be found. While some call for a return to the 1950s family, with women financially dependent on their spouses, others passionately advocate for recognition of structural inequality and for feminist action. AI models are trained largely on information available on the web, so the choice of which data is used to build them is crucial. Depending on that data, existing stereotypes can be replicated, and even reinforced.

Amazone conducted a small test and asked ChatGPT to generate two images: one of a nurse and one of a CEO. Although we used explicitly gender-neutral terms, ChatGPT produced stereotypical images of both professions, depicting the nurse as a woman and the CEO as a man.

Image: ChatGPT pictures a CEO as male and a nurse as female. © ChatGPT.

But it doesn’t stop there. Stereotyping in AI can also have direct negative consequences for women in their careers and daily lives. In 2018, it emerged that online retailer Amazon had developed an AI tool for screening job applications. The aim was to automate the initial selection of candidates based on the profiles of successful employees. However, the algorithm was trained mostly on resumes submitted by men, and as a result the system learned to penalize applications from women. Once the bias came to light, the project was scrapped, but it illustrates the real dangers of uncontrolled AI.

By Men = For Men

Research also shows that women themselves use AI less often. In October 2024, American research showed that women use artificial intelligence at work on average 25% less than men. They expressed concerns about the ethical use of AI and feared they would be judged more harshly for using it in the workplace. Many were afraid of being perceived as less competent and too dependent on the technology.

This gap in AI usage not only affects women’s productivity and job market opportunities but also shapes the data AI learns from. What users type into a chatbot can be used to train future versions of the model. With fewer female users, the system is more likely to develop further bias, becoming a technology made by men, for men. If it learns mainly from men’s questions and requests, gender inequality in this type of technology will only grow.

Environment and Ethics

Women’s ethical concerns about AI are far from unfounded. In addition to reproducing stereotypes, tools like ChatGPT raise growing concerns about their environmental impact. Training algorithms and cooling data centers require significant amounts of energy. Research shows that a ChatGPT query consumes about 25 times more energy than a comparable Google search. Image generation is especially energy-intensive: creating a single image can consume as much energy as fully charging a smartphone. If AI keeps growing at its current pace, it could consume around 3.5% of global electricity by 2030. Current AI models are far from energy efficient, and excessive use could further contribute to global warming.

Women are disproportionately affected by the consequences of climate change. Not only are they less likely to survive natural disasters, but they also bear heavier economic and social consequences, which can have long-term negative effects on gender equality as a whole. Women therefore have every reason to object to polluting tools such as AI.

Unlearning and Relearning

Fortunately, there is also good news. If an algorithm can be taught, it can, in principle, also be untaught. Researchers believe that tools like ChatGPT, if properly moderated, can actually help advance gender equality. Used well, AI could challenge stereotypes and offer users new insights and ways of thinking.

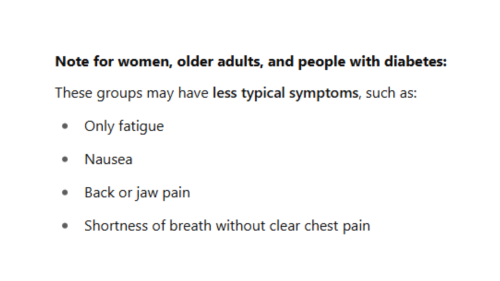

Luckily, our brief investigation already turned up one such positive example. Amazone asked ChatGPT to list the symptoms of a heart attack. Although heart attacks are a widely known medical condition, women often show different symptoms than men and are therefore diagnosed later, or not at all. ChatGPT clearly highlighted this and listed symptoms specifically for women, older adults, and people with diabetes. With the right information, ChatGPT can indeed contribute to a more gender-equal society and help dispel false stereotypes.

Image: When asked about the symptoms of a heart attack, ChatGPT specifically listed symptoms for women, older adults and people with diabetes. © ChatGPT.

UtopAI: What Now?

It is clear that unlimited and unregulated use of AI will not benefit gender equality. It is equally crucial to involve women at every stage of AI development and use; only then can existing stereotypes be challenged. Moderate, reflective use of AI, in which the value of each result is critically examined, is a good first step. Learning how to use these tools and building awareness of their potential and pitfalls is essential.

Want to learn more about the impact of AI on gender? Start by exploring the sources below.

- How AI reinforces gender bias—and what we can do about it | UN Women – Headquarters

- Gender Bias In AI: Addressing Technological Disparities | Forbes

- Generative AI: UNESCO study reveals alarming evidence of regressive gender stereotypes | UNESCO

- Women Are Avoiding AI. Will Their Careers Suffer? | Working Knowledge

- Gender biases within Artificial Intelligence and ChatGPT: Evidence, Sources of Biases and Solutions | ScienceDirect

Sara-Lynn Milis

Projects & Resource centre